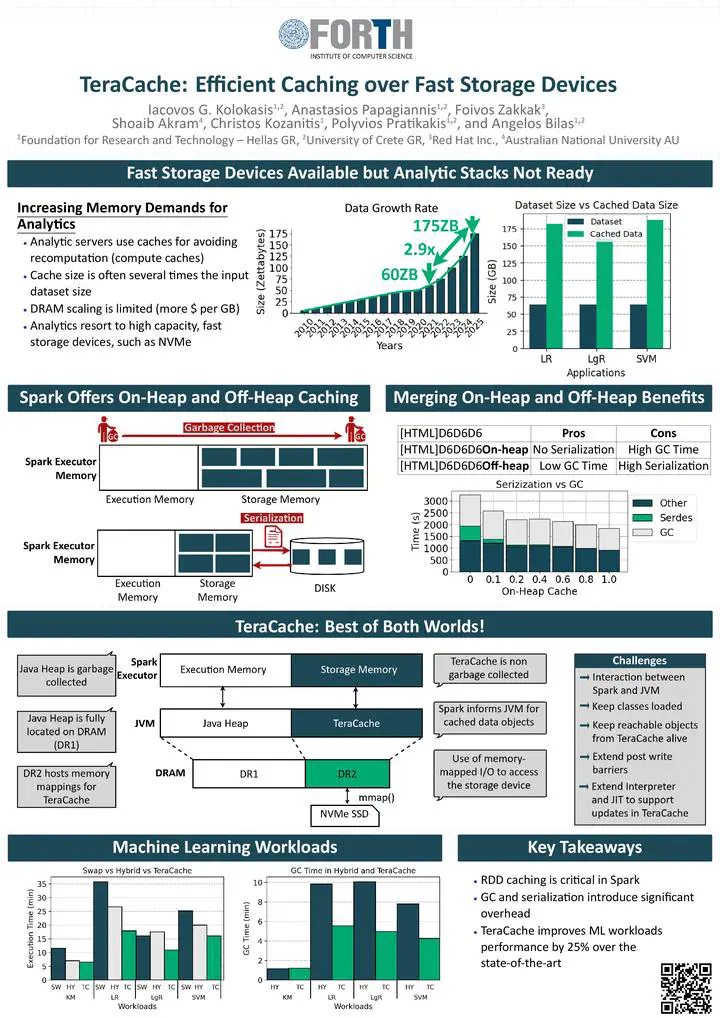

TeraCache: Efficient Caching over Fast Storage Devices

Abstract

Many analytics computations are dominated by iterative processing stages that are executed until a convergence condition is met. To accelerate such workloads while keeping up with the exponential growth of data and the slow scaling of DRAM capacity, Spark employs off-heap caching of intermediate results. However, off-heap caching requires serialization and deserialization (serdes) of data, which adds significant overhead, especially as datasets grow. This thesis proposes TeraCache, an extension of the Spark data cache that avoids the need for serdes by keeping all cached data on-heap but off-memory (outside DRAM), using memory-mapped I/O (mmio). To achieve this, TeraCache extends the original JVM heap with a managed heap that resides on a memory-mapped fast storage device and is used exclusively for cached data. Preliminary results show that the TeraCache prototype can speed up Machine Learning (ML) workloads that cache intermediate results by up to 37% compared to the state-of-the-art serdes approach.
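To make the contrast between the two approaches concrete, the following minimal Java sketch (not TeraCache itself) shows the two primitives involved. `cacheOffHeap`/`readOffHeap` mimic serdes-style off-heap caching: a partition is serialized into a direct `ByteBuffer` and must be deserialized into fresh heap objects on every read. Java's built-in `ObjectOutputStream` is used here as a stand-in for Spark's serializers. `sumViaMmap` shows memory-mapped I/O with `FileChannel.map`, where loads and stores go straight to a mapped file with no serdes step. All class and method names are hypothetical, and a temporary file stands in for the fast storage device. Note that plain Java can only map raw bytes (here, `int`s). Placing real JVM objects in a second, device-backed managed heap, as TeraCache does, requires changes inside the JVM and is not shown.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;

public class SerdesVsMmioSketch {

    // Serdes-style off-heap caching: the object graph is serialized into a
    // direct (off-heap) buffer; the serialization cost is paid on every cache write.
    static ByteBuffer cacheOffHeap(ArrayList<Integer> partition) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(partition);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        byte[] raw = bytes.toByteArray();
        ByteBuffer offHeap = ByteBuffer.allocateDirect(raw.length);
        offHeap.put(raw).flip();
        return offHeap;
    }

    // Reading the cached partition back copies the bytes onto the heap and
    // deserializes them into new objects on every access.
    @SuppressWarnings("unchecked")
    static ArrayList<Integer> readOffHeap(ByteBuffer offHeap) {
        byte[] raw = new byte[offHeap.remaining()];
        offHeap.duplicate().get(raw); // duplicate() leaves the cached buffer's position untouched
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(raw))) {
            return (ArrayList<Integer>) in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    // Memory-mapped I/O: a temp file (standing in for a fast storage device) is
    // mapped into the address space, and ints are stored and loaded directly in
    // the mapped region without any serialization step.
    static long sumViaMmap(int[] values) {
        try {
            Path file = Files.createTempFile("mmio-sketch", ".bin");
            file.toFile().deleteOnExit();
            try (FileChannel ch = FileChannel.open(file,
                    StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                // Mapping beyond the current file size extends the file.
                MappedByteBuffer region =
                        ch.map(FileChannel.MapMode.READ_WRITE, 0, 4L * values.length);
                for (int v : values) region.putInt(v);
                long sum = 0;
                for (int i = 0; i < values.length; i++) sum += region.getInt(4 * i);
                return sum;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        ArrayList<Integer> partition = new ArrayList<>();
        for (int i = 0; i < 1000; i++) partition.add(i);
        System.out.println(readOffHeap(cacheOffHeap(partition)).equals(partition)); // true

        int[] values = new int[1000];
        for (int i = 0; i < values.length; i++) values[i] = i;
        System.out.println(sumViaMmap(values)); // 499500
    }
}
```

The serdes path returns a logically equal but freshly allocated list on each read, which is the per-access overhead the abstract refers to. The mmio path touches the stored data in place, which is the property TeraCache builds on at the level of whole JVM objects.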